Pruning in Snowflake: Working Smarter, Not Harder

Abstract

Modern cloud-based data analytics systems must efficiently process petabytes of data residing on cloud storage. A key optimization technique in state-of-the-art systems like Snowflake is partition pruning—skipping chunks of data that do not contain relevant information for computing query results.

While partition pruning based on query predicates is a well-established technique, we demonstrate that its scope can be extended to LIMIT, top-k, and JOIN operations, dramatically expanding the opportunities for pruning across diverse query types.

Our analysis of Snowflake's production workloads reveals that real-world analytical queries exhibit much higher selectivity than commonly assumed, yielding effective partition pruning. We show that we can harness high selectivity by utilizing only min/max metadata available in modern data analytics systems and data lake formats like Apache Iceberg, reducing the number of processed micro-partitions by 99.4%.

Highlights

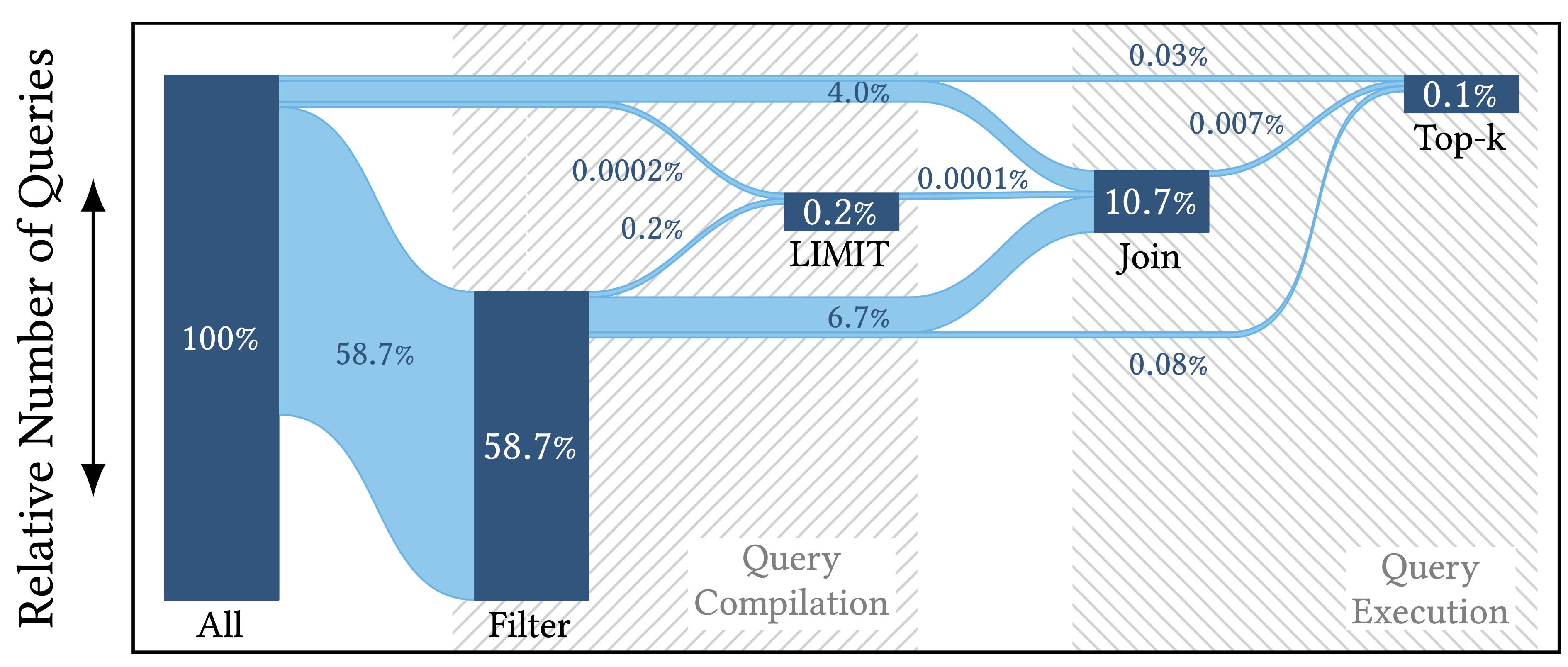

We presented four data pruning techniques that are used in the Snowflake data platform and evaluated them based on their pruning effectiveness on real-world customer workloads. The pruning flow is as follows:

- Filter Pruning: All queries containing a

WHEREpredicate are subject to filter pruning, which removes data partitions by identifying the ones that do not contain any rows that would match the filter condition in theWHEREclause. - LIMIT Pruning: If the query is a simple

LIMITquery without anORDER BY, the query engine tries to reduce the number of partitions to the minimally necessary set of partitions such that the query result can be computed. This works for queries with and withoutWHEREclause. - Join Pruning: While executing a join, the probe side of a join is subject to join pruning, i.e., removing partitions from the probe side that do not contain join partners based on the collected values from the build side.

- Top-K Pruning: Lastly, if a query is a top-k query, i.e., it contains an

ORDER BY ... LIMIT, a specialized top-k pruning technique attempts to reduce the number of processed partitions by pushing runtime information about the current top-k heap down to the table scan.

While the first two techniques can be applied during query optimization, the latter two techniques require runtime information about the current state of the query execution.

The pruning flow can be visualized as follows:

Note that the figure contains distributions across all query types, including DML queries. The distribution of SELECT-only queries might be different as certain operators might be seen more often—e.g., DML queries tend to not contain LIMIT clauses and might have fewer joins.

As in interesting side-remark, we also objectified that it makes sense to optimize for small LIMIT k by looking at the relative distribution of of k across production queries:

Related Links

- The blog series "Say Hello to Superfast Top‑K Queries in Snowflake", "Continued Investments in Price Performance and Faster Top‑K Queries", and "Reimagining Top‑K Aggregation Optimization at Snowflake" focuses on optimizations regarding pruning capabilities of Top‑K queries in Snowflake.

- The blog post "Pruning for Iceberg: 90% of an Iceberg Is Underwater" elaborates on pruning in Snowflake on Apache Iceberg tables.

Follow-Up Work and Scientific Discourse

No follow-up work has been published yet. Please reach out to the main author of this paper if you think your work should be included here.Cite this Paper

@inproceedings{Zimmerer2025Pruning,

title = {Pruning in Snowflake: Working Smarter, Not Harder},

author = {Zimmerer, Andreas and Dam, Damien and Kossmann, Jan and Waack, Juliane and Oukid, Ismail and Kipf, Andreas},

editor = {Volker Markl and Joe Hellerstein and Azza Abouzied},

booktitle = {Companion of the 2025 International Conference on Management of Data ({SIGMOD}-Companion '25), June 22--27, 2025, Berlin, Germany},

publisher = {{ACM}},

year = {2025},

url = {https://doi.org/10.1145/3722212.3724447},

doi = {10.1145/3722212.3724447},

}